Partner POV | VMware Private AI: Democratize generative AI and ignite innovation for all enterprises

This article was written by Krish Prasad, Senior Vice President and General Manager of VMware's Cloud Infrastructure Business Group.

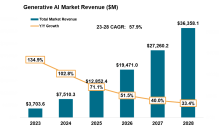

Generative AI is one of the biggest trends that will transform enterprises in the next decade. It holds immense potential by enabling machines to create music, amplify human creativity, and solve complex problems. Generative AI applications are diverse, including content creation, code generation, contact center experiences, IT operations automation, and sophisticated chatbots for data generation. As AI drives productivity gains and enables new experiences, many core functions within a typical business will be transformed, including sales, marketing, software development, customer operations, and document processing. At the heart of this wave of AI innovation are Large Language Models (LLMs) that process huge and varied data sets.

Source: 451 Research, part of S&P Global Market Intelligence – Generative AI Market Monitor 2023. © 2023 S&P Global.

Source: Gartner, Over 100 Data and Analytics Predictions Through 2028, 23 April 2023. GARTNER is a registered trademark and service mark of Gartner, Inc. and/or its affiliates in the U.S. and internationally and is used herein with permission. All rights reserved.

Challenges

While generative AI is a transformative technology, enterprises face daunting challenges in its deployment.

- Privacy: Enterprise data and IP are private and critically valuable when training large language models to serve the organization's specific needs. This data needs to be protected to prevent leakage outside the organizational boundary.

- Choice: Enterprises need to be able to choose the large language model (LLM) that best fits their generative AI journey and organizational needs, so access to a broad ecosystem and a variety of choices is essential.

- Cost: Generative AI models are complex and costly to architect since they are rapidly evolving with new vendors, SaaS components, and bleeding-edge AI software continuously launched and deployed.

- Performance: Demand on the infrastructure surges during model testing and when data queries are executed. Large language models, by their very nature, usually require managing enormous data sets. Consequently, these models can place significant demands on infrastructure, leading to performance issues.

- Compliance: Organizations in different industries have different compliance needs that enterprise solutions, including generative AI, must meet. Access control and audit readiness are vital aspects to consider.

VMware Private AI

VMware recently announced the launch of VMware Private AI. This architectural approach for AI services enables privacy and control of corporate data, choice of open source and commercial AI solutions, quick time-to-value, and integrated security and management.

With VMware Private AI, you get the flexibility to run a range of AI solutions for your environment: NVIDIA AI software, open-source community repositories, and independent software vendors. Deploy with confidence, knowing that VMware has built partnerships with the leading AI providers. Achieve great performance in your model with vSphere and VMware Cloud Foundation GPU integrations. Augment productivity by eliminating redundant tasks and building intelligent process improvement mechanisms.

VMware's extensive network of partners encompasses industry leaders such as NVIDIA, Intel, and major server OEMs like Dell Technologies, Hewlett Packard Enterprise (HPE), and Lenovo. As the visual above showcases, VMware collaborates with various AI vendors and MLOps providers, including Anyscale, Run:ai, and Domino Data Lab.

Benefits

- Get the Flexibility of Choice: Get the flexibility to run a range of AI software for your environment, including NVIDIA AI Enterprise, open-source repositories, or ISV offerings with VMware Private AI. Achieve the best fit for your application and use case.

- Deploy with confidence: VMware Private AI offers generative AI solutions in partnership with NVIDIA and other partners, all of whom are respected leaders in the high-tech space. This enables you to securely use your private corporate data for fine-tuning, inference, and, in some cases, even training in-house.

- Achieve Great Performance: This solution supports NVIDIA GPU technologies and the pooling of these GPUs to extract great performance for AI workloads. The latest benchmark study compared AI workloads on the VMware + NVIDIA AI-Ready Enterprise Platform against bare metal. The results show performance that is similar to and, in some cases, better than bare metal. Hence, putting AI workloads on virtualized solutions preserves performance while adding the benefits of virtualization, such as ease of management and enterprise-grade security.

- Augment Productivity: Leverage VMware Private AI for your generative AI models and maximize your organization's productivity by building private chatbots, automating repetitive tasks, enabling smart search, and building intelligent process monitoring tools.

Top Use Cases

VMware Private AI solutions enable several use cases for enterprises by securely enabling the fine-tuning and deployment (inference) of large language models within their private corporate environment. Here are the top use cases that enterprises can enable using these platforms.

- Code Generation: These solutions accelerate developer velocity by enabling code generation. Privacy in code generation is of utmost concern. With VMware Private AI solutions, enterprises can use their models without risking the loss of their IP or data.

- Contact center resolution experience: VMware Private AI can significantly improve customer experience by raising the quality of the content and feedback that contact centers provide to customers and by improving the accuracy of responses.

- IT Operations Automation: Using VMware Private AI, enterprises can significantly reduce the time IT operations agents spend on routine work by enhancing operational automation in areas such as incident management, reporting, ticketing, and monitoring.

- Advanced information retrieval: Platforms based on VMware Private AI can significantly boost employee productivity by improving document search and policy and procedure research.

Exciting Options For Our Customers

VMware Private AI Foundation with NVIDIA: VMware and NVIDIA announced plans to collaborate to develop a fully integrated generative AI platform called VMware Private AI Foundation with NVIDIA. This platform will enable enterprises to fine-tune LLMs and run inference workloads in their data centers, addressing privacy, choice, cost, performance, and compliance concerns. The platform will include the NVIDIA NeMo™ framework, NVIDIA LLMs, and other community models (such as Hugging Face models) running on VMware Cloud Foundation. Stay tuned as VMware will share more information on this exciting platform, which will be launched in early 2024. This announcement extends the 10+ year joint mission of helping customers reinvent their multi-cloud infrastructure. The companies partnered 3+ years ago to create the VMware + NVIDIA AI-Ready Enterprise Platform, which enables IT admins to deliver an AI-ready infrastructure on which data scientists can deliver and scale AI and ML projects, and helps organizations scale modern workloads using the same VMware technology and toolset they're already familiar with.

VMware Private AI Reference Architecture: VMware has collaborated with partners such as NVIDIA, Hugging Face, Ray, PyTorch, and Kubeflow to provide customers with validated reference architectures with major server manufacturers Dell Technologies, Hewlett Packard Enterprise (HPE), and Lenovo. The key components are:

- VMware Cloud Foundation: VMware Cloud Foundation, the turnkey platform for multi-cloud and modern applications, will provide the infrastructure layer for enterprises on which generative AI models can be easily deployed.

- NeMo framework: One of the key components of this architecture is the NeMo framework, part of NVIDIA AI Enterprise, an end-to-end, cloud-native enterprise framework to build, fine-tune, and deploy generative AI models with billions of parameters. It offers a choice of several customization techniques and is optimized for at-scale inference of large-scale models for language and image applications, with multi-GPU and multi-node configurations.

- Major Server OEM Support: This architecture is supported by major server OEMs such as Dell, Lenovo, and HPE.

- Dell Technologies: Rethink the status quo with generative AI. The collaborative engineering of Dell Technologies, VMware, and NVIDIA delivers a Full Stack for generative AI in the enterprise. VMware industry-leading products and expert professional services help you unlock the value of your data faster without losing control of your intellectual property.

- Hewlett Packard Enterprise (HPE): HPE, VMware, and NVIDIA share decades of strategic innovation. The new HPE AI Inference solutions integrate VMware Private AI Foundation and the NVIDIA AI Enterprise software suite with new HPE ProLiant Gen11 systems to streamline AI deployment and accelerate customers' AI advantage.

- Lenovo: Leveraging the Lenovo ThinkSystem SR675 V3, VMware Private AI Foundation, and NVIDIA AI Enterprise, Lenovo's newest Reference Design for Generative AI shows businesses how to deploy and commercialize powerful generative AI tools and foundation models using a pre-validated, fully integrated, and performance-optimized solution for enterprise data centers.